The Curse of Recursion: Training on Generated Data Makes Models Forget

arXiv: 2305.17493


Abstract

Stable Diffusion revolutionised image creation from descriptive text. GPT-2, GPT-3(.5) and GPT-4 demonstrated astonishing performance across a variety of language tasks. ChatGPT introduced such language models to the general public. It is now clear that large language models (LLMs) are here to stay, and will bring about drastic change in the whole ecosystem of online text and images. In this paper we consider what the future might hold. What will happen to GPT-{n} once LLMs contribute much of the language found online? We find that use of model-generated content in training causes irreversible defects in the resulting models, where tails of the original content distribution disappear. We refer to this effect as Model Collapse and show that it can occur in Variational Autoencoders, Gaussian Mixture Models and LLMs. We build theoretical intuition behind the phenomenon and portray its ubiquity amongst all learned generative models. We demonstrate that it has to be taken seriously if we are to sustain the benefits of training from large-scale data scraped from the web. Indeed, data collected about genuine human interactions with systems will be increasingly valuable in the presence of LLM-generated content in data crawled from the Internet.


Overview

- The paper explores the potential impact of large language models (LLMs) like GPT-3 and ChatGPT on the future of online content and the models themselves.

- It introduces the concept of "Model Collapse," where training on model-generated content causes irreversible defects in the resulting models: the tails of the original data distribution disappear.

- The paper builds theoretical intuition for this phenomenon and shows that it occurs across different generative models, including Variational Autoencoders, Gaussian Mixture Models and LLMs.

Plain English Explanation

Generative models such as Stable Diffusion, GPT-3 and GPT-4 have transformed how text and images are created and consumed online. As these models become more prevalent, the paper asks what will happen once they produce a significant portion of the content found online.

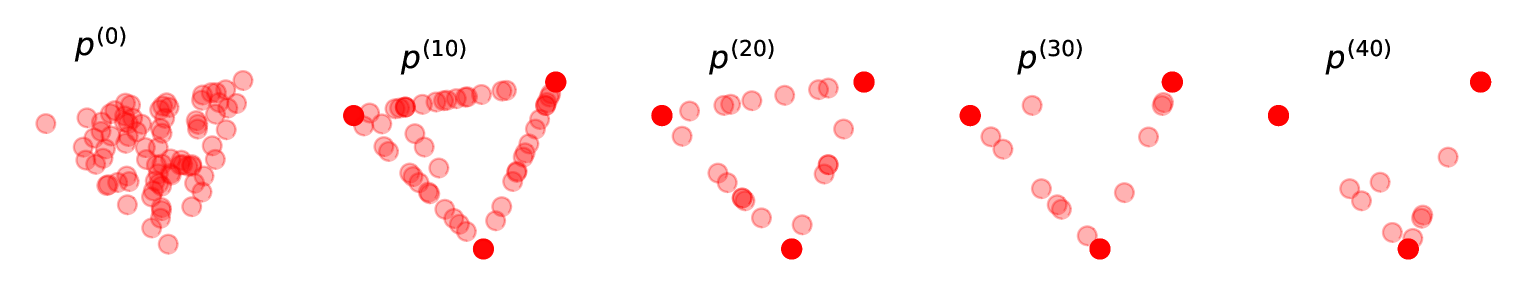

The key concern is a phenomenon the authors call "Model Collapse." When models are trained on content generated by earlier models, the damage can be irreversible: rare or unusual elements of the original data distribution (its tails) disappear, and the models become less diverse and less representative of genuine human-generated content.
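The disappearance of rare elements can be reproduced in a deliberately tiny setting. The sketch below is an illustration under simplifying assumptions, not the authors' code: a hypothetical "model" is just the empirical token frequencies of its training set (a unigram model), and each generation is trained only on samples drawn from the previous generation's model. Once a rare token is missing from one generation's sample it receives probability zero, so it can never come back.

```python
import random
from collections import Counter


def fit_unigram(samples):
    """Maximum-likelihood unigram 'model': empirical token frequencies."""
    counts = Counter(samples)
    return {token: c / len(samples) for token, c in counts.items()}


def sample(model, n, rng):
    """Draw n tokens from a unigram model."""
    tokens = list(model)
    return rng.choices(tokens, weights=[model[t] for t in tokens], k=n)


def recursive_training(true_dist, n_samples=100, generations=100, seed=0):
    """Train each generation only on data sampled from the previous generation."""
    rng = random.Random(seed)
    data = sample(true_dist, n_samples, rng)  # generation 0 sees real data
    models = []
    for _ in range(generations):
        model = fit_unigram(data)
        models.append(model)
        data = sample(model, n_samples, rng)  # later generations see only synthetic data
    return models


# Hypothetical "true" distribution: one frequent token plus ten rare ones (the tail).
TRUE_DIST = {"common": 0.95, **{f"rare_{i}": 0.005 for i in range(10)}}

models = recursive_training(TRUE_DIST)
for g in (0, 9, 99):
    print(f"generation {g}: {len(models[g])} distinct tokens survive")
```

The vocabulary of each generation is always a subset of the previous one, which is the discrete analogue of the tails vanishing.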

This effect is not limited to LLMs: the paper shows that it also occurs in other generative models such as Variational Autoencoders and Gaussian Mixture Models. The authors offer a theoretical explanation for why it happens and argue that it affects learned generative models in general.

The implication is that, as LLMs become more prevalent, data collected from genuine human interactions with these systems will become increasingly valuable. Training data will need careful curation to avoid the pitfalls of Model Collapse and to preserve the benefits we have come to expect from large language models.

Technical Explanation

The paper asks what will happen to future models once LLM-generated text makes up a large share of online content. It introduces the concept of "Model Collapse": training on model-generated content causes irreversible defects in the resulting models, in which the tails of the original content distribution disappear.

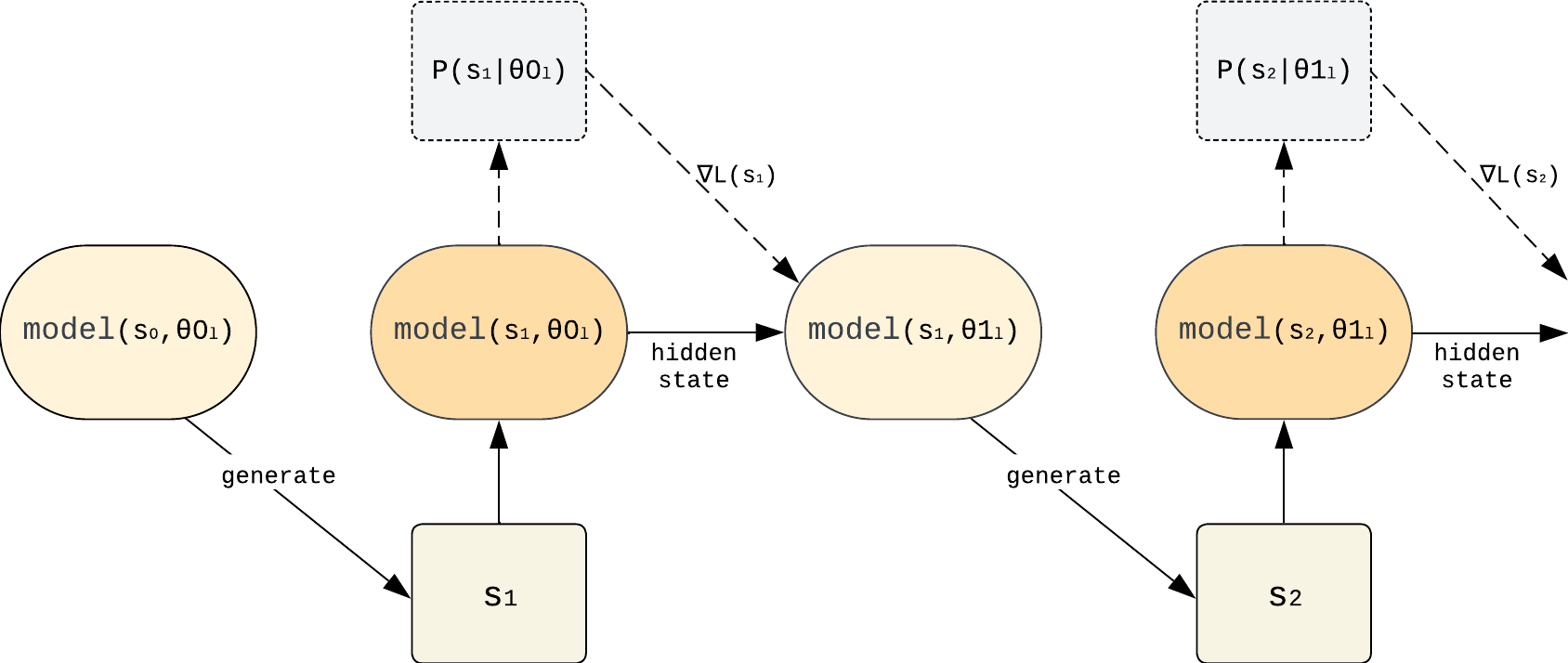

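The authors demonstrate that this phenomenon occurs in several kinds of generative model, including Variational Autoencoders, Gaussian Mixture Models, and LLMs. Their theoretical explanation traces Model Collapse to the recursive nature of training on synthetic data, where errors compound from one generation to the next. They identify two main sources of error: statistical approximation error, which arises because each generation is trained on a finite sample, so low-probability events can be missed and then lost for good; and functional approximation error, which arises because the model class cannot represent the true distribution exactly.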
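The effect of fitting each generation to a finite sample can be seen in a single one-dimensional Gaussian that is refit generation after generation. The sketch below is a toy illustration under stated assumptions: each generation estimates a mean and an unbiased variance from `n_samples` points drawn from the previous generation's fit, and the sample size, generation count and seed are illustrative choices, not values from the paper. Each step multiplies the variance by a random factor whose expected logarithm is negative (by Jensen's inequality), so the fitted variance drifts towards zero even though every individual estimator is unbiased.

```python
import random


def fit_gaussian(xs):
    """Return the sample mean and unbiased sample variance of xs."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)
    return mean, var


def recursive_gaussian(n_samples=10, generations=1000, seed=0):
    """Fit a Gaussian, sample from it, refit on those samples, and repeat.

    Returns the fitted variance for every generation (index 0 = fit on real data).
    """
    rng = random.Random(seed)
    data = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]  # real data ~ N(0, 1)
    variances = []
    for _ in range(generations):
        mean, var = fit_gaussian(data)
        variances.append(var)
        # The next generation only ever sees samples from the current fit.
        data = [rng.gauss(mean, var ** 0.5) for _ in range(n_samples)]
    return variances


variances = recursive_gaussian()
for g in (0, 10, 100, 999):
    print(f"generation {g}: fitted variance = {variances[g]:.3e}")
```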

Through a series of experiments, the paper illustrates how widespread Model Collapse is. As each generation is trained on data produced by the previous one, the resulting models lose diversity: rare or unusual elements of the original data distribution become less likely and eventually vanish.
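How much the synthetic share matters can be sketched with the same toy Gaussian. In this hypothetical setup, each generation trains on `n` points of which a fraction `real_fraction` is drawn from a fixed pool of original data and the rest is sampled from the previous generation's fit; the pool size, `n` and the fractions are illustrative assumptions, not the paper's settings. With purely synthetic training the variance collapses, while keeping some original data in every generation holds it away from zero, because the (population) variance of a mixture can never fall below the weighted variance of its real component.

```python
import random


def fit_gaussian(xs):
    """Return the sample mean and unbiased sample variance of xs."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)
    return mean, var


def train_generations(real_fraction, n=50, generations=3000, seed=0):
    """Recursive Gaussian fitting where each training set mixes real and synthetic data."""
    rng = random.Random(seed)
    real_pool = [rng.gauss(0.0, 1.0) for _ in range(1000)]  # stand-in for human data
    n_real = round(real_fraction * n)
    mean, var = fit_gaussian(rng.sample(real_pool, n))  # generation 0: all real
    for _ in range(generations):
        real = rng.sample(real_pool, n_real)
        synthetic = [rng.gauss(mean, var ** 0.5) for _ in range(n - n_real)]
        mean, var = fit_gaussian(real + synthetic)
    return var


results = {frac: train_generations(frac) for frac in (0.0, 0.2)}
for frac, var in results.items():
    print(f"real_fraction={frac}: final variance = {var:.3e}")
```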

The implications are significant, as LLMs like GPT-3 and ChatGPT continue to transform how online content is created and consumed. The paper argues that data collected from genuine human interactions will become increasingly valuable for sustaining the benefits of these models.

Critical Analysis

The paper provides a compelling and well-researched exploration of the potential pitfalls of using model-generated content to train large language models. The authors' theoretical explanation for Model Collapse is convincing and backed by experimental evidence across multiple model types.

One potential limitation of the research is the lack of a clear solution or mitigation strategy for the problem. While the paper highlights the importance of curating training data to avoid Model Collapse, it does not offer specific recommendations or techniques for doing so. Further research in this area could be valuable.

Additionally, the paper does not explore the potential societal implications of Model Collapse in LLMs. As these models become more prevalent in tasks like machine translation, the consequences of biased or unrepresentative language models could be far-reaching and merit additional consideration.

Overall, the paper makes a significant contribution to our understanding of the challenges facing large language models as they become more integrated into our online ecosystem. The insights presented here should encourage researchers and practitioners to think critically about the data used to train these powerful systems and the potential unintended consequences of their widespread adoption.

Conclusion

The paper highlights a critical issue facing the future of large language models (LLMs): the phenomenon of "Model Collapse." Training these models on content generated by earlier models can cause irreversible defects, in which unique or rare elements of the original data distribution disappear.

The authors demonstrate that this problem is not limited to LLMs; it can occur in a variety of generative models, including Variational Autoencoders and Gaussian Mixture Models. By providing a theoretical explanation and empirical evidence for the ubiquity of Model Collapse, the paper raises important questions about the sustainability of training LLMs on data scraped from the web.

As LLMs become more prevalent in our online ecosystem, data collected from genuine human interactions will become increasingly valuable. The research presented in this paper suggests that careful curation and selection of training data will be essential to maintaining the benefits and diversity of these powerful language models.

Related Papers

Collapse of Self-trained Language Models

David Herel, Tomas Mikolov


In various fields of knowledge creation, including science, new ideas often build on pre-existing information. In this work, we explore this concept within the context of language models. Specifically, we explore the potential of self-training models on their own outputs, akin to how humans learn and build on their previous thoughts and actions. While this approach is intuitively appealing, our research reveals its practical limitations. We find that extended self-training of the GPT-2 model leads to a significant degradation in performance, resulting in repetitive and collapsed token output.

4/4/2024


The Reversal Curse: LLMs trained on A is B fail to learn B is A

Lukas Berglund, Meg Tong, Max Kaufmann, Mikita Balesni, Asa Cooper Stickland, Tomasz Korbak, Owain Evans


We expose a surprising failure of generalization in auto-regressive large language models (LLMs). If a model is trained on a sentence of the form "A is B", it will not automatically generalize to the reverse direction "B is A". This is the Reversal Curse. For instance, if a model is trained on "Valentina Tereshkova was the first woman to travel to space", it will not automatically be able to answer the question, "Who was the first woman to travel to space?". Moreover, the likelihood of the correct answer ("Valentina Tereshkova") will not be higher than for a random name. Thus, models do not generalize a prevalent pattern in their training set: if "A is B" occurs, "B is A" is more likely to occur. It is worth noting, however, that if "A is B" appears in-context, models can deduce the reverse relationship. We provide evidence for the Reversal Curse by finetuning GPT-3 and Llama-1 on fictitious statements such as "Uriah Hawthorne is the composer of Abyssal Melodies" and showing that they fail to correctly answer "Who composed Abyssal Melodies?". The Reversal Curse is robust across model sizes and model families and is not alleviated by data augmentation. We also evaluate ChatGPT (GPT-3.5 and GPT-4) on questions about real-world celebrities, such as "Who is Tom Cruise's mother? [A: Mary Lee Pfeiffer]" and the reverse "Who is Mary Lee Pfeiffer's son?". GPT-4 correctly answers questions like the former 79% of the time, compared to 33% for the latter. Code available at: https://github.com/lukasberglund/reversal_curse.

4/8/2024

Is Model Collapse Inevitable? Breaking the Curse of Recursion by Accumulating Real and Synthetic Data

Matthias Gerstgrasser, Rylan Schaeffer, Apratim Dey, Rafael Rafailov, Henry Sleight, John Hughes, Tomasz Korbak, Rajashree Agrawal, Dhruv Pai, Andrey Gromov, Daniel A. Roberts, Diyi Yang, David L. Donoho, Sanmi Koyejo


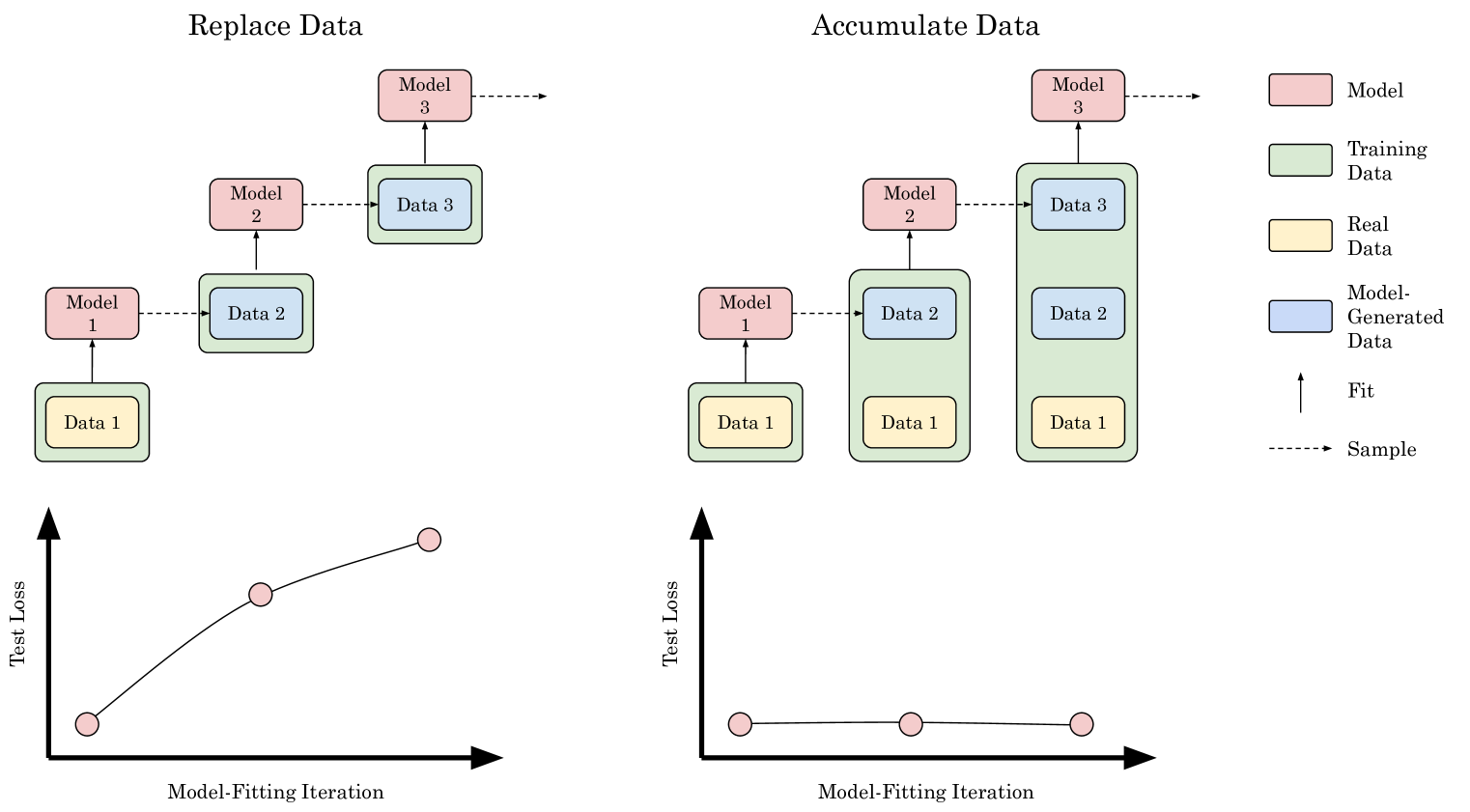

The proliferation of generative models, combined with pretraining on web-scale data, raises a timely question: what happens when these models are trained on their own generated outputs? Recent investigations into model-data feedback loops proposed that such loops would lead to a phenomenon termed model collapse, under which performance progressively degrades with each model-data feedback iteration until fitted models become useless. However, those studies largely assumed that new data replace old data over time, whereas an arguably more realistic assumption is that data accumulate over time. In this paper, we ask: what effect does accumulating data have on model collapse? We empirically study this question by pretraining sequences of language models on text corpora. We confirm that replacing the original real data with each generation's synthetic data does indeed tend towards model collapse, then demonstrate that accumulating the successive generations of synthetic data alongside the original real data avoids model collapse; these results hold across a range of model sizes, architectures, and hyperparameters. We obtain similar results for deep generative models on other types of real data: diffusion models for molecule conformation generation and variational autoencoders for image generation. To understand why accumulating data can avoid model collapse, we use an analytically tractable framework introduced by prior work in which a sequence of linear models is fit to the previous models' outputs. Previous work used this framework to show that if data are replaced, the test error increases with the number of model-fitting iterations; we extend this argument to prove that if data instead accumulate, the test error has a finite upper bound independent of the number of iterations, meaning model collapse no longer occurs.
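The replace-versus-accumulate contrast in this abstract can be illustrated with a toy one-dimensional Gaussian. This is a hypothetical sketch, not the authors' setup (which pretrains language models and fits linear models): in `replace` mode each generation is fit only on the newest synthetic batch, while in `accumulate` mode each new batch is appended to a training pool that still contains the original real data and every earlier batch. Batch size, generation count and seed are illustrative assumptions.

```python
import random


def fit_gaussian(xs):
    """Return the sample mean and unbiased sample variance of xs."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)
    return mean, var


def final_variance(mode, n=10, generations=600, seed=0):
    """Run recursive Gaussian fitting and return the last fitted variance.

    mode="replace":    each generation trains only on the newest synthetic batch.
    mode="accumulate": each new batch is added to a pool that keeps all older data.
    """
    if mode not in ("replace", "accumulate"):
        raise ValueError(f"unknown mode: {mode!r}")
    rng = random.Random(seed)
    pool = [rng.gauss(0.0, 1.0) for _ in range(n)]  # original real data ~ N(0, 1)
    for _ in range(generations):
        mean, var = fit_gaussian(pool)
        batch = [rng.gauss(mean, var ** 0.5) for _ in range(n)]
        if mode == "replace":
            pool = batch  # old data is discarded
        else:
            pool.extend(batch)  # old data, including the real data, is kept
    return fit_gaussian(pool)[1]


replace_var = final_variance("replace")
accumulate_var = final_variance("accumulate")
print(f"replace:    {replace_var:.3e}")
print(f"accumulate: {accumulate_var:.3e}")
```

In the accumulating pool each new batch is a shrinking share of the total, so later fits move less and less, which is the intuition behind the bounded error described above.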

5/1/2024

How Bad is Training on Synthetic Data? A Statistical Analysis of Language Model Collapse

Mohamed El Amine Seddik, Suei-Wen Chen, Soufiane Hayou, Pierre Youssef, Merouane Debbah


The phenomenon of model collapse, introduced in (Shumailov et al., 2023), refers to the deterioration in performance that occurs when new models are trained on synthetic data generated from previously trained models. This recursive training loop makes the tails of the original distribution disappear, thereby making future-generation models forget about the initial (real) distribution. With the aim of rigorously understanding model collapse in language models, we consider in this paper a statistical model that allows us to characterize the impact of various recursive training scenarios. Specifically, we demonstrate that model collapse cannot be avoided when training solely on synthetic data. However, when mixing both real and synthetic data, we provide an estimate of a maximal amount of synthetic data below which model collapse can eventually be avoided. Our theoretical conclusions are further supported by empirical validations.
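The real-versus-synthetic trade-off described here can be illustrated with a toy unigram model. This is a hypothetical sketch, not the statistical model from the paper: every generation is fit on `n_real` fresh samples from a made-up true distribution plus `n_synthetic` samples from the previous generation's model, and the reported quantity is the total variation (TV) distance to the true distribution. With no real data the chain eventually locks onto a single token, whereas fresh real data in every generation keeps it close to the truth.

```python
import random
from collections import Counter

# Hypothetical true token distribution.
TRUE_DIST = {"the": 0.5, "cat": 0.2, "sat": 0.15, "on": 0.1, "mat": 0.05}


def sample(dist, n, rng):
    """Draw n tokens from a distribution given as a dict."""
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=n)


def fit(samples):
    """Maximum-likelihood unigram fit: empirical token frequencies."""
    counts = Counter(samples)
    return {t: c / len(samples) for t, c in counts.items()}


def tv_distance(p, q):
    """Total variation distance between two distributions given as dicts."""
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(t, 0.0) - q.get(t, 0.0)) for t in support)


def run(n_real, n_synthetic, generations=2000, seed=0):
    rng = random.Random(seed)
    model = fit(sample(TRUE_DIST, n_real + n_synthetic, rng))  # generation 0: real only
    for _ in range(generations):
        data = sample(TRUE_DIST, n_real, rng) + sample(model, n_synthetic, rng)
        model = fit(data)
    return tv_distance(model, TRUE_DIST)


synthetic_only = run(n_real=0, n_synthetic=50)
mixed = run(n_real=50, n_synthetic=50)
print(f"synthetic only: TV = {synthetic_only:.3f}")
print(f"mixed:          TV = {mixed:.3f}")
```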

4/9/2024