NaturalSpeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models

arXiv: 2403.03100

Abstract

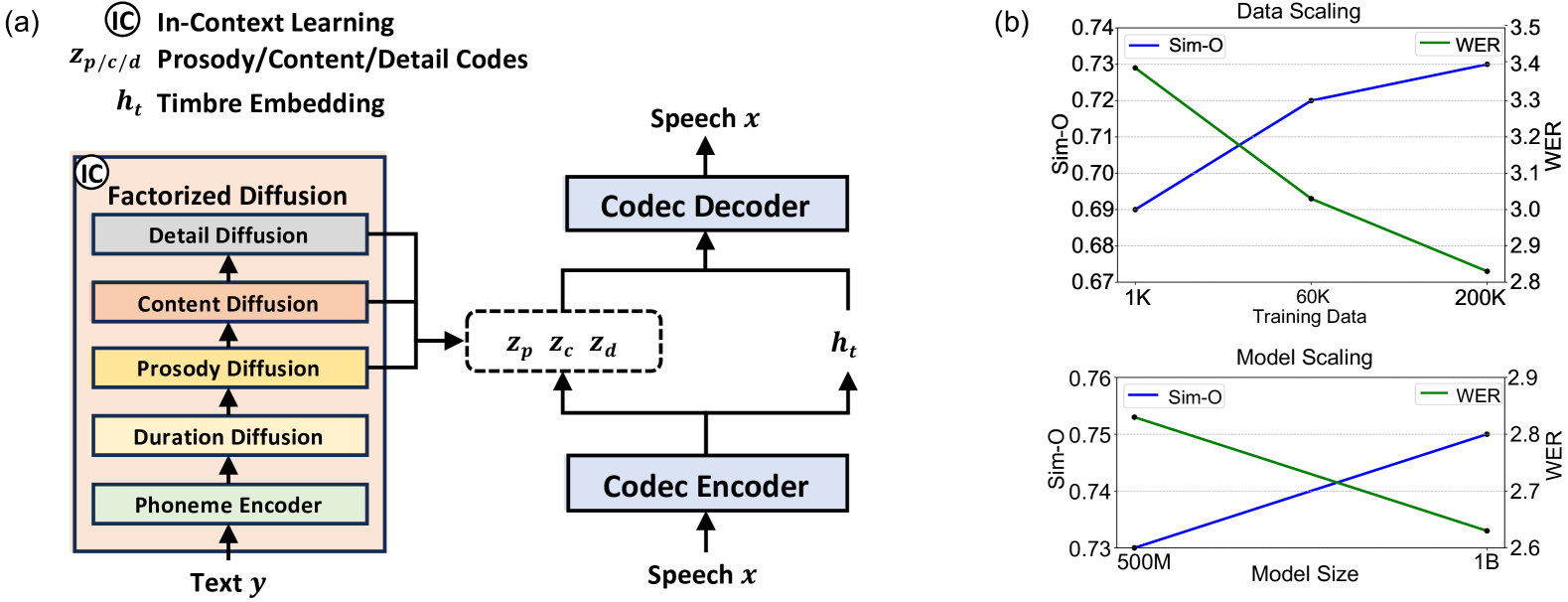

While recent large-scale text-to-speech (TTS) models have achieved significant progress, they still fall short in speech quality, similarity, and prosody. Considering speech intricately encompasses various attributes (e.g., content, prosody, timbre, and acoustic details) that pose significant challenges for generation, a natural idea is to factorize speech into individual subspaces representing different attributes and generate them individually. Motivated by it, we propose NaturalSpeech 3, a TTS system with novel factorized diffusion models to generate natural speech in a zero-shot way. Specifically, 1) we design a neural codec with factorized vector quantization (FVQ) to disentangle speech waveform into subspaces of content, prosody, timbre, and acoustic details; 2) we propose a factorized diffusion model to generate attributes in each subspace following its corresponding prompt. With this factorization design, NaturalSpeech 3 can effectively and efficiently model intricate speech with disentangled subspaces in a divide-and-conquer way. Experiments show that NaturalSpeech 3 outperforms the state-of-the-art TTS systems on quality, similarity, prosody, and intelligibility, and achieves on-par quality with human recordings. Furthermore, we achieve better performance by scaling to 1B parameters and 200K hours of training data.

Overview

- This paper introduces NaturalSpeech 3, a zero-shot text-to-speech (TTS) system that uses a factorized neural codec and factorized diffusion models to generate high-quality speech for unseen speakers from only a short speech prompt, without fine-tuning on the target speaker.

- The key innovations are (1) a neural codec with factorized vector quantization (FVQ), which disentangles the speech waveform into separate subspaces for content, prosody, timbre, and acoustic details, and (2) a factorized diffusion model that generates the attributes in each subspace following the corresponding part of the prompt.

- The authors report that NaturalSpeech 3 outperforms state-of-the-art TTS systems on quality, similarity, prosody, and intelligibility, reaches quality on par with human recordings, and improves further when scaled to 1B parameters and 200K hours of training data.

Plain English Explanation

In this paper, the researchers present NaturalSpeech 3, a new AI system that can generate human-like speech in the voice of a speaker it was never trained on, using only a short recording of that speaker as a prompt. This is known as "zero-shot" speech synthesis.

The core idea behind NaturalSpeech 3 is to break speech down into separate attributes: the content (what is said), the prosody (the intonation and rhythm of how it is said), the timbre (the speaker's characteristic voice quality), and the remaining acoustic details. Because each attribute is modeled separately, the system can take the voice characteristics from a short prompt and apply them to new text, even for a speaker it never encountered during training.

To do this, the researchers use a "factorized codec", a neural network that compresses audio into separate codes for each of these attributes and can turn those codes back into a waveform. They then train "factorized diffusion models", another type of neural network, to generate the codes for each attribute, with each attribute guided by the matching part of the prompt. The codec then decodes the generated codes into speech.

The end result is a system that synthesizes high-quality speech in unseen voices; the authors report that it outperforms previous state-of-the-art TTS systems and matches the quality of human recordings. This could have applications in areas like voice assistants, audiobook narration, and dubbing for films and TV shows.

Technical Explanation

The key technical innovations in NaturalSpeech 3 are a factorized neural codec and a factorized diffusion model.

The factorized codec is a neural network that encodes the speech waveform with factorized vector quantization (FVQ): separate quantizers represent content, prosody, timbre, and acoustic details in their own subspaces, and a decoder reconstructs the waveform from them. This disentanglement is what lets the system reproduce an unseen speaker's voice from a short prompt without adapting the model to that speaker.
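To make "one quantizer per attribute subspace" concrete, here is a toy sketch in NumPy. It is not the paper's codec: the class name `ToyFactorizedVQ`, the dimensions, and the random, untrained projections and codebooks are all hypothetical. The real FVQ is trained with extra supervision so that each subspace actually captures its intended attribute, whereas here the attribute names are only labels.

```python
import numpy as np

ATTRIBUTES = ("content", "prosody", "timbre", "acoustic_details")


class ToyFactorizedVQ:
    """Toy factorized vector quantizer: one projection + codebook per attribute.

    Hypothetical illustration only: projections and codebooks are random,
    not learned, and nothing enforces disentanglement.
    """

    def __init__(self, latent_dim=16, sub_dim=4, codebook_size=8, seed=0):
        rng = np.random.default_rng(seed)
        # Per-attribute linear projection from the shared latent into a small subspace.
        self.proj = {a: rng.standard_normal((latent_dim, sub_dim)) for a in ATTRIBUTES}
        # Per-attribute codebook of shape (codebook_size, sub_dim).
        self.codebooks = {a: rng.standard_normal((codebook_size, sub_dim)) for a in ATTRIBUTES}

    def encode(self, frames):
        """Map (T, latent_dim) frames to a dict of (T,) integer code sequences."""
        codes = {}
        for a in ATTRIBUTES:
            z = frames @ self.proj[a]  # (T, sub_dim)
            # Squared Euclidean distance from each frame to each codeword: (T, codebook_size).
            d = ((z[:, None, :] - self.codebooks[a][None, :, :]) ** 2).sum(-1)
            codes[a] = d.argmin(axis=1)  # nearest codeword index per frame
        return codes

    def lookup(self, codes):
        """Replace each code index by its codeword and concatenate the subspaces."""
        parts = [self.codebooks[a][codes[a]] for a in ATTRIBUTES]
        return np.concatenate(parts, axis=-1)  # (T, len(ATTRIBUTES) * sub_dim)


fvq = ToyFactorizedVQ()
rng = np.random.default_rng(1)
source = rng.standard_normal((10, 16))  # stand-in latent frames for a source utterance
prompt = rng.standard_normal((10, 16))  # stand-in latent frames for a speaker prompt
src_codes, prm_codes = fvq.encode(source), fvq.encode(prompt)
# Keep the source's content, prosody, and details, but take the timbre codes from the prompt.
mixed = dict(src_codes, timbre=prm_codes["timbre"])
features = fvq.lookup(mixed)
print(features.shape)  # (10, 16)
```

Swapping one attribute's codes while leaving the others untouched is the kind of operation that factorization makes possible. In NaturalSpeech 3 the codec decoder would turn such codes back into a waveform, a step this sketch omits.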

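The factorized diffusion model then generates the representation in each of the codec's subspaces, guided by the corresponding attribute of the speech prompt, so that intricate speech is modeled as several simpler generation problems in a divide-and-conquer way. Diffusion models, in general, learn to reverse a gradual noising process: training corrupts data step by step and teaches a network to undo that corruption, and sampling starts from noise and applies the learned reversal. This approach has produced high-fidelity results in speech tasks such as text-to-speech and zero-shot speech editing.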
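The "add noise, then learn to reverse it" idea can be illustrated with the standard Gaussian (DDPM-style) forward process, where a sample at step $t$ is $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$. This is a generic sketch, not NaturalSpeech 3's own diffusion formulation, which may differ. The linear beta schedule and the helper names are assumptions, and the "denoiser" here is an oracle that is handed the true noise in place of a trained network's estimate.

```python
import numpy as np


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t (a common DDPM-style choice, assumed here)."""
    return np.linspace(beta_start, beta_end, num_steps)


def q_sample(x0, t, alpha_bars, noise):
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    abar = alpha_bars[t]
    return np.sqrt(abar) * x0 + np.sqrt(1.0 - abar) * noise


def predict_x0(x_t, t, alpha_bars, predicted_noise):
    """Invert q_sample given a noise estimate (a trained network would supply it)."""
    abar = alpha_bars[t]
    return (x_t - np.sqrt(1.0 - abar) * predicted_noise) / np.sqrt(abar)


betas = linear_beta_schedule()
alpha_bars = np.cumprod(1.0 - betas)  # fraction of signal variance kept after t steps

rng = np.random.default_rng(0)
x0 = rng.standard_normal(8)  # stand-in for a clean feature vector
noise = rng.standard_normal(8)
x_early = q_sample(x0, 10, alpha_bars, noise)  # still mostly signal
x_late = q_sample(x0, 999, alpha_bars, noise)  # almost pure noise

# With a perfect noise estimate, the clean sample is recovered up to float error.
x0_hat = predict_x0(x_late, 999, alpha_bars, noise)
```

In practice the network is trained to predict `noise` from `x_late` and `t`, and sampling repeats small reverse steps rather than the single oracle inversion shown here.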

The authors report that NaturalSpeech 3 outperforms state-of-the-art TTS systems on speech quality, similarity, prosody, and intelligibility, and reaches quality on par with human recordings; scaling to 1B parameters and 200K hours of training data improves results further. They also show that the representations learned by the factorized codec are meaningful and disentangled.
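Two of these axes are commonly scored automatically in TTS evaluations: intelligibility as the word error rate (WER) of an ASR transcript against the input text, and speaker similarity as the cosine similarity between speaker-verification embeddings of the generated speech and the prompt. The sketch below computes both from given transcripts and embeddings; the ASR and speaker-embedding models are out of scope, and the exact protocol used in the paper is not described here.

```python
import numpy as np


def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j]: edit distance between the first i reference and first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    # Guard against division by zero for an empty reference.
    return dist[len(ref)][len(hyp)] / max(len(ref), 1)


def speaker_similarity(emb_a, emb_b):
    """Cosine similarity between two speaker embeddings."""
    a, b = np.asarray(emb_a, dtype=float), np.asarray(emb_b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


print(round(word_error_rate("the cat sat on the mat", "the cat sit on mat"), 3))  # 0.333
print(round(speaker_similarity([1, 0], [1, 1]), 3))  # 0.707
```

Lower WER means more intelligible speech; a similarity closer to 1 means the generated voice is closer to the prompt speaker, as judged by whichever embedding model is used.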

Critical Analysis

One potential limitation of the NaturalSpeech 3 approach is its reliance on large, high-quality speech datasets for training the factorized codec and diffusion models (the largest configuration uses 200K hours of data). In real-world applications such datasets may not always be readily available, which could limit the system's applicability.

Additionally, the paper does not explore the robustness of the system to noisy or low-quality input data, which would be an important consideration for real-world deployment. Further research into the model's ability to handle diverse and challenging acoustic conditions would be valuable.

Overall, however, the NaturalSpeech 3 system represents a significant advancement in zero-shot speech synthesis, with the potential to enable new applications and experiences in areas like virtual assistants, audio production, and language learning.

Conclusion

The NaturalSpeech 3 system introduces a novel approach to zero-shot speech synthesis, using a factorized codec and factorized diffusion models to generate high-quality speech in unseen voices from a short speech prompt, without fine-tuning on the target speaker. This work builds on and advances the state of the art in zero-shot text-to-speech and voice conversion, and could have significant implications for a wide range of applications involving synthetic speech. While the system has some limitations, the core ideas and techniques presented in this paper represent an important step forward in the field of speech synthesis.

Related Papers

FlashSpeech: Efficient Zero-Shot Speech Synthesis

Zhen Ye, Zeqian Ju, Haohe Liu, Xu Tan, Jianyi Chen, Yiwen Lu, Peiwen Sun, Jiahao Pan, Weizhen Bian, Shulin He, Qifeng Liu, Yike Guo, Wei Xue

Recent progress in large-scale zero-shot speech synthesis has been significantly advanced by language models and diffusion models. However, the generation process of both methods is slow and computationally intensive. Efficient speech synthesis using a lower computing budget to achieve quality on par with previous work remains a significant challenge. In this paper, we present FlashSpeech, a large-scale zero-shot speech synthesis system with approximately 5% of the inference time compared with previous work. FlashSpeech is built on the latent consistency model and applies a novel adversarial consistency training approach that can train from scratch without the need for a pre-trained diffusion model as the teacher. Furthermore, a new prosody generator module enhances the diversity of prosody, making the rhythm of the speech sound more natural. The generation processes of FlashSpeech can be achieved efficiently with one or two sampling steps while maintaining high audio quality and high similarity to the audio prompt for zero-shot speech generation. Our experimental results demonstrate the superior performance of FlashSpeech. Notably, FlashSpeech can be about 20 times faster than other zero-shot speech synthesis systems while maintaining comparable performance in terms of voice quality and similarity. Furthermore, FlashSpeech demonstrates its versatility by efficiently performing tasks like voice conversion, speech editing, and diverse speech sampling. Audio samples can be found in https://flashspeech.github.io/.

4/26/2024

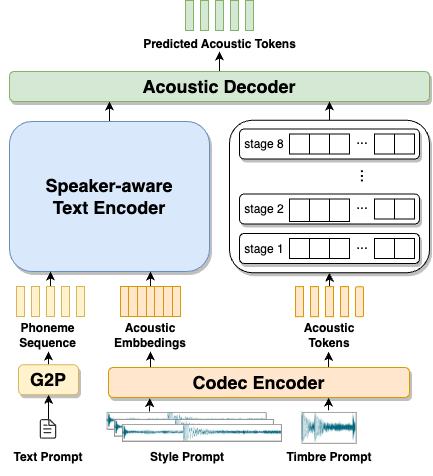

Improving Language Model-Based Zero-Shot Text-to-Speech Synthesis with Multi-Scale Acoustic Prompts

Shun Lei, Yixuan Zhou, Liyang Chen, Dan Luo, Zhiyong Wu, Xixin Wu, Shiyin Kang, Tao Jiang, Yahui Zhou, Yuxing Han, Helen Meng

Zero-shot text-to-speech (TTS) synthesis aims to clone any unseen speaker's voice without adaptation parameters. By quantizing speech waveform into discrete acoustic tokens and modeling these tokens with the language model, recent language model-based TTS models show zero-shot speaker adaptation capabilities with only a 3-second acoustic prompt of an unseen speaker. However, they are limited by the length of the acoustic prompt, which makes it difficult to clone personal speaking style. In this paper, we propose a novel zero-shot TTS model with the multi-scale acoustic prompts based on a neural codec language model VALL-E. A speaker-aware text encoder is proposed to learn the personal speaking style at the phoneme-level from the style prompt consisting of multiple sentences. Following that, a VALL-E based acoustic decoder is utilized to model the timbre from the timbre prompt at the frame-level and generate speech. The experimental results show that our proposed method outperforms baselines in terms of naturalness and speaker similarity, and can achieve better performance by scaling out to a longer style prompt.

4/10/2024

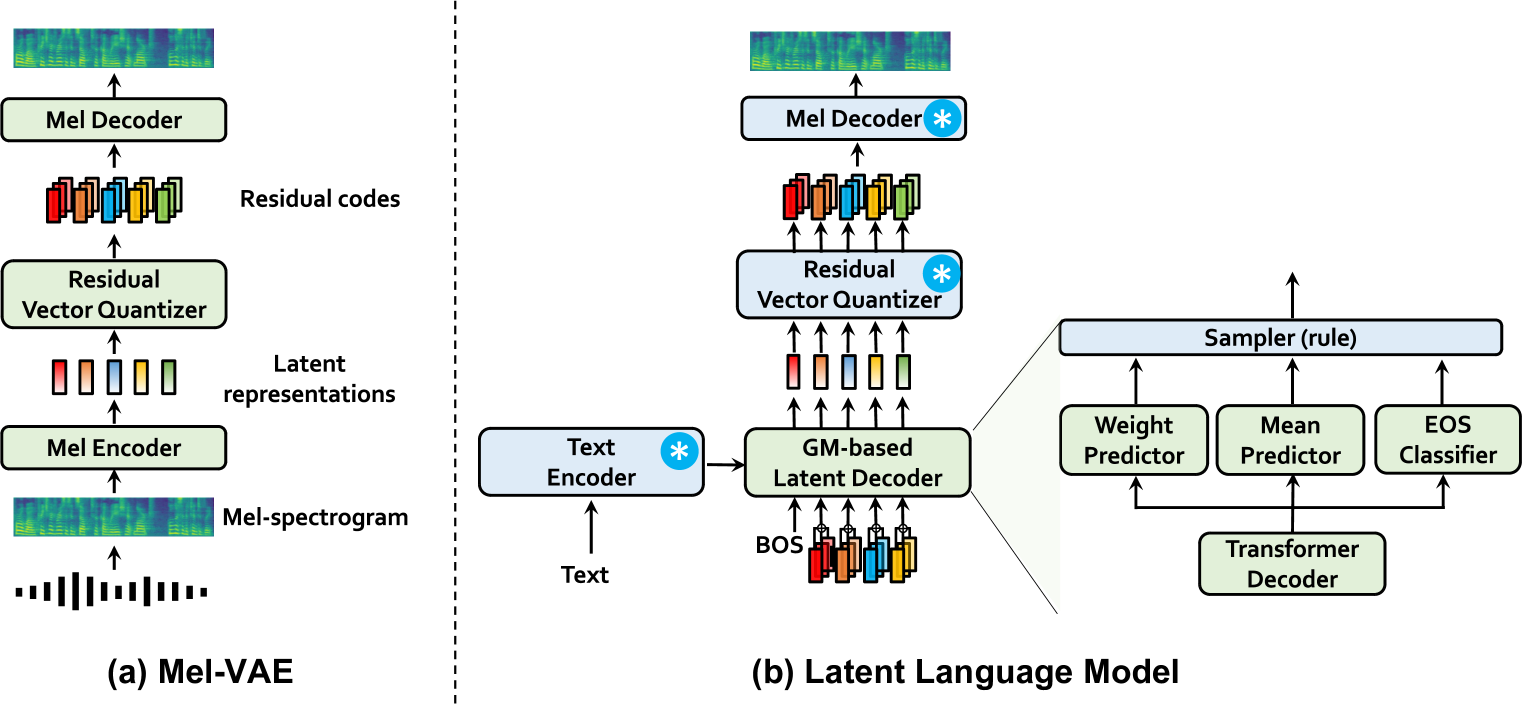

CLaM-TTS: Improving Neural Codec Language Model for Zero-Shot Text-to-Speech

Jaehyeon Kim, Keon Lee, Seungjun Chung, Jaewoong Cho

With the emergence of neural audio codecs, which encode multiple streams of discrete tokens from audio, large language models have recently gained attention as a promising approach for zero-shot Text-to-Speech (TTS) synthesis. Despite the ongoing rush towards scaling paradigms, audio tokenization ironically amplifies the scalability challenge, stemming from its long sequence length and the complexity of modelling the multiple sequences. To mitigate these issues, we present CLaM-TTS that employs a probabilistic residual vector quantization to (1) achieve superior compression in the token length, and (2) allow a language model to generate multiple tokens at once, thereby eliminating the need for cascaded modeling to handle the number of token streams. Our experimental results demonstrate that CLaM-TTS is better than or comparable to state-of-the-art neural codec-based TTS models regarding naturalness, intelligibility, speaker similarity, and inference speed. In addition, we examine the impact of the pretraining extent of the language models and their text tokenization strategies on performances.

4/4/2024

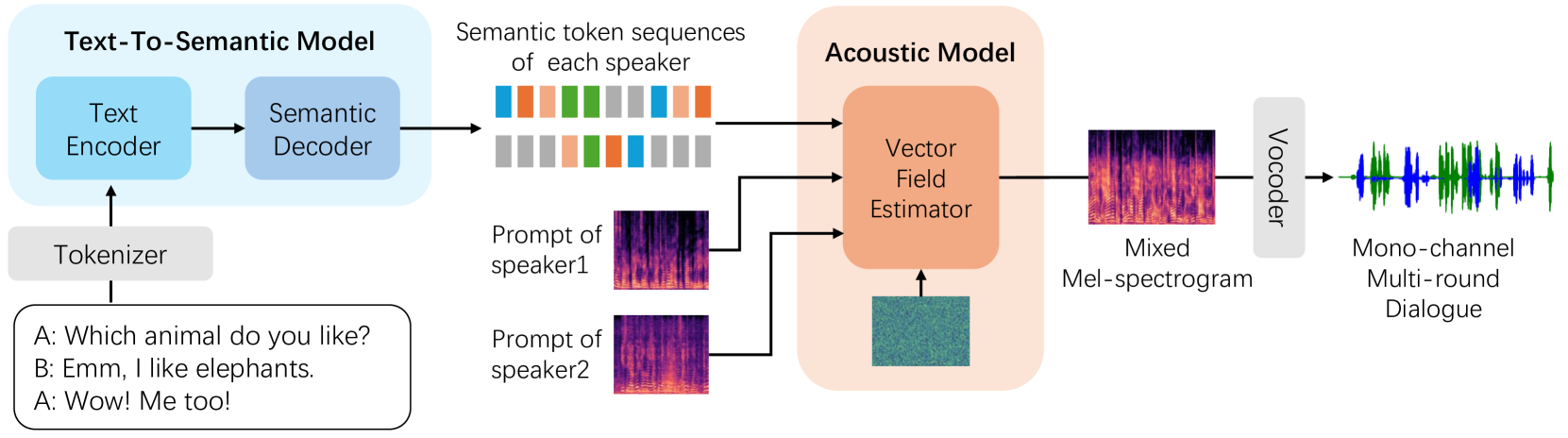

CoVoMix: Advancing Zero-Shot Speech Generation for Human-like Multi-talker Conversations

Leying Zhang, Yao Qian, Long Zhou, Shujie Liu, Dongmei Wang, Xiaofei Wang, Midia Yousefi, Yanmin Qian, Jinyu Li, Lei He, Sheng Zhao, Michael Zeng

Recent advancements in zero-shot text-to-speech (TTS) modeling have led to significant strides in generating high-fidelity and diverse speech. However, dialogue generation, along with achieving human-like naturalness in speech, continues to be a challenge in the field. In this paper, we introduce CoVoMix: Conversational Voice Mixture Generation, a novel model for zero-shot, human-like, multi-speaker, multi-round dialogue speech generation. CoVoMix is capable of first converting dialogue text into multiple streams of discrete tokens, with each token stream representing semantic information for individual talkers. These token streams are then fed into a flow-matching based acoustic model to generate mixed mel-spectrograms. Finally, the speech waveforms are produced using a HiFi-GAN model. Furthermore, we devise a comprehensive set of metrics for measuring the effectiveness of dialogue modeling and generation. Our experimental results show that CoVoMix can generate dialogues that are not only human-like in their naturalness and coherence but also involve multiple talkers engaging in multiple rounds of conversation. These dialogues, generated within a single channel, are characterized by seamless speech transitions, including overlapping speech, and appropriate paralinguistic behaviors such as laughter. Audio samples are available at https://aka.ms/covomix.

4/11/2024